Commonwealth Club of California • Apr 4, 2023 • SAN FRANCISCO

OpenAI’s question-and-answer chatbot ChatGPT has shaken up Silicon Valley and is already disrupting a wide range of fields and industries, including education. But the potential risks of this new era of artificial intelligence go far beyond students cheating on their term papers. Even OpenAI’s founder warns that “the question of whose values we align these systems to will be one of the most important debates society ever has.” How will artificial intelligence impact your job and life? And is society ready? We talk with UC Berkeley computer science professor and A.I. expert Stuart Russell about those questions and more.

Photo courtesy the speaker.

April 3, 2023

Speakers

Stuart Russell, Professor of Computer Science, Director of the Kavli Center for Ethics, Science, and the Public, and Director of the Center for Human-Compatible AI, University of California, Berkeley; Author, Human Compatible: Artificial Intelligence and the Problem of Control

Jerry Kaplan, Adjunct Lecturer in Computer Science, Stanford University (Moderator)


How ChatGPT conquered the tech world in 100 days

By Benjamin Pimentel | Examiner staff writer

Feb 17, 2023; updated Feb 19, 2023 (SFExaminer.com)

The artificial intelligence craze now sweeping the world began in the Mission District.

On Nov. 30, OpenAI announced a new tool from San Francisco’s Pioneer Building on 18th Street, a 100-year-old structure whose first life was a luggage factory.

Without much fanfare, the startup said its new AI chatbot, called ChatGPT, could interact with a user “in a conversational way, answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests.”

Frenzy ensued. ChatGPT amassed more than a million users within five days of releasing its free app. By January, it had 13 million unique visitors every day and 100 million monthly active users. It is the most astounding product rollout since the dot-com era.

ChatGPT has proved to be a fun, if unnerving, tool. You can use it to write poems, essays and wacky lyrics, or to draft business proposals. The excitement for a tool that writes and “thinks” much faster than most humans has touched nearly every realm: business, politics, education, the arts. It has turned the spotlight on artificial intelligence, a field born from Alan Turing’s theory of computation and that computer scientists have long predicted would transform the very nature of human existence.

“It exploded on the landscape,” Jef Loeb, creative director of the San Francisco advertising agency Brainchild Creative, told The Examiner. “San Francisco was definitely ground zero for this.”


When AI hibernated

“AI winter” is what the tech world calls a period when the quest for artificial intelligence technology, which began after World War II, seemed to be going nowhere: funding dried up, research stalled and overall interest waned, as happened in the 1970s and 1980s and again in the mid-1990s.

But AI has been on a steady march forward since the start of the 21st century. The advance was propelled by more powerful chips, more sophisticated software and the ever-expanding reach of the web.

AI reached a high point this winter in the San Francisco Bay Area, where a lot of these capabilities emerged — the chips, the software, the new approaches.

Google’s search engine blazed the trail for “machine learning,” which trains computers to solve problems by figuring out patterns. Eventually, Silicon Valley companies, including startups, turned to a new AI approach that offered even more impressive capabilities.

“Deep learning” uses artificial neural networks that can pick up, record and process data and signals that are then organized the way human memory operates. With deep learning, a computer can mimic the way the human brain works.

That computing firepower and more sophisticated algorithms made it possible to create pretty much anything — essays and letters, business proposals, paintings, videos. It’s called Generative AI.

And ChatGPT underscored its power.


Enter OpenAI

Sam Altman, CEO and co-founder of OpenAI, studied computer science at Stanford and served as president of Y Combinator, the world-famous Mountain View startup accelerator that launched such famous names as Airbnb, Stripe and DoorDash.

Altman co-founded OpenAI in 2015, with tech pioneers like Tesla CEO (and now Twitter owner) Elon Musk, investor Peter Thiel and LinkedIn co-founder Reid Hoffman. The company started out as a nonprofit with a noble goal: to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.”

In February, Altman told Forbes, “I think capitalism is awesome. I love capitalism. Of all of the bad systems the world has, it’s the best one — or the least bad one we found so far. I hope we find a way better one.”

OpenAI and ChatGPT sparked a new wave of capitalist fervor. Suddenly, AI was the buzzword among investors. Every startup or tech company had to have an AI plan.

Asking about an AI strategy became a routine part of doing business, said Logan Allin, managing partner and founder of Fin Capital, a San Francisco venture investment firm. “When talking to people, I ask, ‘What is your answer to AI?’” he told The Examiner. “If you can’t answer that at the board level or the management level, you have a big problem.”

There was also a distinct shift in attitude among big institutions that invest in VC firms — pension funds, endowments and sovereign wealth funds — said Nicolai Wadstrom, founder and CEO of BootstrapLabs, another San Francisco VC firm focused mainly on AI.

People “we have known for a long time” and who weren’t exactly enthusiastic about AI suddenly “changed their minds” about the technology, Wadstrom said. “That’s a clear change in the past 100 days.”

That wasn’t surprising. ChatGPT astounded anyone who tried it. When Allin of Fin Capital asked ChatGPT for insights into investing in the growing segment of the financial tech industry called embedded finance, “the answer that came back was incredibly thoughtful,” he said.

“We were like, ‘Oh, boy, this is very disruptive,’” he told The Examiner.

So disruptive, in fact, that just 10 weeks after it was introduced, ChatGPT actually sparked a major tech brawl. The technology was immediately tagged as a serious threat to Google, especially with OpenAI’s close relationship with Microsoft, one of the startup’s major investors.

The stakes became clear when Google fumbled the opening salvo of its AI counteroffensive in February. The tech giant introduced its own AI chatbot named Bard, but an ad for the new tool featured an incorrect response to a question on the James Webb Telescope. The error sent Alphabet’s stock plummeting, wiping out $100 billion in market value.

“Google comes out with Bard, which is a terrible name, and second of all, they completely dropped the ball,” Allin said. “The market reaction was definitely severe. But rightly so. This is the future of search.”


The future of tech

But ChatGPT also raised questions about the future of tech.

Three days after it was introduced, Elon Musk tweeted to Altman: “ChatGPT is scary good. We are not far from dangerously strong AI.”

Altman agreed, tweeting back that the new technology “poses a huge cybersecurity risk” and that as AI becomes more powerful “we have to take the risk of that extremely seriously.”

These risks became evident just days after ChatGPT was announced.

On Dec. 21, three weeks after ChatGPT’s release, a team of Check Point Software engineers in San Carlos and Tel Aviv flagged a user on an underground hacking forum who bragged about creating phishing malware with help from ChatGPT. The hacker had zero coding skills.

A few days later, on New Year’s Eve, another user started a thread titled “Abusing ChatGPT to create Dark Web marketplace scripts.”

Sergey Shykevich, a Check Point threat intelligence manager, called the chatter on underground hacking forums “the beginning of the first step on possible future nightmares.” ChatGPT, he argued, became a resounding success “too fast.”

Beyond enabling criminal hackers on the Dark Web, other worries have emerged.

Schools braced themselves for the impact of ChatGPT’s use on campuses. At Stanford, some professors began overhauling their courses to get ahead of how the tool was used in assignments. An informal poll by The Stanford Daily found “a large number of students” had used the tool to take final exams.

Generative AI came under fire when a brewing battle over how some companies used the work of artists exploded into the open. A group of artists filed a lawsuit in San Francisco federal court accusing three prominent AI imaging companies — Stability AI, Midjourney and DeviantArt — of copyright infringement and unlawfully appropriating their work. One of the artists, San Francisco illustrator Karla Ortiz, criticized what she described as “the deeply exploitative AI media models practices.”

“If misinformation and fake news was bad before, this is only really going to accelerate that times 10,” Chris McCann, a partner at San Francisco venture firm Race Capital, told The Examiner. “It’s going to be much, much, much harder to understand the provenance” of a creative work, “whether somebody actually made this themselves, not made by themselves, whether it’s augmented. It’s going to become way more confusing.”

But there were also those who touted ways by which AI can help creators.

In Palo Alto, Nikhil Abraham and Mohit Shah, founders of CloudChef, joined Indian chef Thomas Zacharias in introducing a new AI-based tool that can record the way a chef cooks and use the digitized information to recreate their dishes. The technology “essentially codifies the chef’s intuition and helps us recreate the recipe,” Zacharias told The Examiner.

Zacharias receives royalties from CloudChef, which sells his meals through an online marketplace. That gives him access to a new market and customers that, he said, “I could never have even dreamt of.”


The next cloud, the next internet

Silicon Valley legend John Chambers, the former CEO of Cisco, the San Jose tech giant, predicts that “AI will be the game changer for the high-tech industry.”

“AI will be the next cloud, the next internet.” And the important advances in AI, he told The Examiner, will “most likely come from a new player because that’s how it’s always occurred before.”

As in other major tech waves, that “new player” will likely come from the Bay Area.

Allin said he hopes the ChatGPT craze “creates a resurgence in R&D and the recognition that the heart of frontier R&D is still in San Francisco.”

Major tech companies have left. Startups are eyeing other states and regions to set up shop. But “San Francisco is still where the best ideas are being generated,” Allin said. “And obviously AI is a key part of that.”

Altman actually said pretty much the same thing two years ago, when the Bay Area and the whole world were reeling from the pandemic.

In December 2020, two years before ChatGPT turned his company into a household name, he tweeted, “It’s easy to not be in the Bay Area right now, because there’s not much to miss-out on.”

But he wasn’t giving up, he declared.

“If you want to have the biggest possible impact in tech, I think you should still move to the Bay Area,” Altman said. “The people here, and the network effects caused by that, are worth it. It’s hard to overstate the magic of lots of competent, optimistic people in one place.”


@benpimentel

ChatGPT

From Wikipedia, the free encyclopedia

| Developer(s) | OpenAI |
|---|---|
| Initial release | November 30, 2022 |
| Type | Chatbot |
| License | Proprietary |
| Website | Official website |

ChatGPT (Chat Generative Pre-trained Transformer)[1] is a chatbot launched by OpenAI in November 2022. It is built on top of OpenAI’s GPT-3 family of large language models and is fine-tuned (an approach to transfer learning)[2] with both supervised and reinforcement learning techniques.

ChatGPT was launched as a prototype on November 30, 2022, and quickly garnered attention for its detailed responses and articulate answers across many domains of knowledge. Its uneven factual accuracy was identified as a significant drawback.[3] Following the release of ChatGPT, OpenAI was valued at US$29 billion.[4]

Training

ChatGPT was fine-tuned on top of GPT-3.5 using supervised learning as well as reinforcement learning.[5] Both approaches used human trainers to improve the model’s performance. In the case of supervised learning, the model was provided with conversations in which the trainers played both sides: the user and the AI assistant. In the reinforcement step, human trainers first ranked responses that the model had created in a previous conversation. These rankings were used to create ‘reward models’ that the model was further fine-tuned on using several iterations of Proximal Policy Optimization (PPO).[6][7] Proximal Policy Optimization algorithms offer a cost-effective alternative to trust region policy optimization algorithms; they avoid many of the computationally expensive operations and run faster.[8][9] The models were trained in collaboration with Microsoft on their Azure supercomputing infrastructure.
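
The two reinforcement-learning ingredients named above can be illustrated with a minimal sketch. It assumes (the text does not say) that the reward model is fitted with a pairwise logistic loss over the trainers' rankings, and it shows the standard clipped surrogate objective of PPO for a single sample. The function names, the sample values and the clipping range of 0.2 are illustrative choices, not details of OpenAI's actual training setup.

```python
import math
from itertools import combinations


def pairwise_ranking_loss(rewards_best_first):
    """Average -log(sigmoid(r_better - r_worse)) over all ranked pairs.

    `rewards_best_first` holds the reward model's scores for several
    responses to one prompt, ordered as the human trainer ranked them
    (best first). The loss is small when the scores agree with the ranking.
    ASSUMPTION: a pairwise logistic loss; the source only says rankings
    were used to create reward models.
    """
    pairs = list(combinations(rewards_best_first, 2))
    total = 0.0
    for better, worse in pairs:
        # -log(sigmoid(x)) == log(1 + exp(-x))
        total += math.log1p(math.exp(-(better - worse)))
    return total / len(pairs)


def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """PPO clipped surrogate objective for a single sample.

    `ratio` is new_policy_prob / old_policy_prob for the sampled action and
    `advantage` estimates how much better than expected that action was.
    Clipping the ratio to [1 - epsilon, 1 + epsilon] removes the incentive
    to move the policy far from the old one in a single update.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    # Scores that agree with the human ranking give a lower loss
    # than scores that contradict it.
    print(pairwise_ranking_loss([2.0, 1.0, 0.0]))
    print(pairwise_ranking_loss([0.0, 1.0, 2.0]))
    # A large ratio with a positive advantage is capped at (1 + epsilon).
    print(ppo_clipped_objective(1.5, 1.0))
```

In a full pipeline the reward scores would come from a neural network and the objective would be averaged over many sampled responses and maximized by gradient ascent; here both functions operate on plain numbers so the arithmetic is easy to follow.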

In addition, OpenAI continues to gather data from ChatGPT users that could be used to further train and fine-tune ChatGPT. Users are allowed to upvote or downvote the responses they receive from ChatGPT; upon upvoting or downvoting, they can also fill out a text field with additional feedback.[10][11]

Features and limitations

Conversation with ChatGPT about whether Jimmy Wales was involved in the Tiananmen Square protests, December 30, 2022

Although the core function of a chatbot is to mimic a human conversationalist, ChatGPT is versatile. For example, it can write and debug computer programs;[12] compose music, teleplays, fairy tales, and student essays; answer test questions (sometimes, depending on the test, at a level above the average human test-taker);[13] write poetry and song lyrics;[14] emulate a Linux system; simulate an entire chat room; play games like tic-tac-toe; and simulate an ATM.[15] ChatGPT’s training data includes man pages and information about Internet phenomena and programming languages, such as bulletin board systems and the Python programming language.[15]

In comparison to its predecessor, InstructGPT, ChatGPT attempts to reduce harmful and deceitful responses.[16] In one example, whereas InstructGPT accepts the premise of the prompt “Tell me about when Christopher Columbus came to the U.S. in 2015” as being truthful, ChatGPT acknowledges the counterfactual nature of the question and frames its answer as a hypothetical consideration of what might happen if Columbus came to the U.S. in 2015, using information about the voyages of Christopher Columbus and facts about the modern world – including modern perceptions of Columbus’ actions.[6]

Unlike most chatbots, ChatGPT remembers previous prompts given to it in the same conversation; journalists have suggested that this will allow ChatGPT to be used as a personalized therapist.[1] To prevent offensive content from being presented to or produced by ChatGPT, queries are filtered through OpenAI’s company-wide moderation API,[17][18] and potentially racist or sexist prompts are dismissed.[6][1]

ChatGPT suffers from multiple limitations. OpenAI acknowledged that ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers”.[6] This behavior is common to large language models and is called artificial intelligence hallucination.[19] The reward model of ChatGPT, designed around human oversight, can be over-optimized and thus hinder performance, an example of Goodhart’s law.[20] ChatGPT has limited knowledge of events that occurred after 2021. According to the BBC, as of December 2022 ChatGPT is not allowed to “express political opinions or engage in political activism”.[21] Yet, research suggests that ChatGPT exhibits a pro-environmental, left-libertarian orientation when prompted to take a stance on political statements from two established voting advice applications.[22] In training ChatGPT, human reviewers preferred longer answers, irrespective of actual comprehension or factual content.[6] Training data also suffers from algorithmic bias, which may be revealed when ChatGPT responds to prompts including descriptors of people. In one instance, ChatGPT generated a rap indicating that women and scientists of color were inferior to white and male scientists.[23][24]

Service

Pioneer Building, San Francisco, headquarters of OpenAI

ChatGPT was launched on November 30, 2022, by San Francisco–based OpenAI, the creator of DALL·E 2 and Whisper AI. The service was initially free to the public, with plans to monetize it later.[25] By December 4, OpenAI estimated ChatGPT already had over one million users.[10] In January 2023, ChatGPT reached over 100 million users, making it the fastest growing consumer application in that time frame.[26] CNBC wrote on December 15, 2022, that the service “still goes down from time to time”.[27] The service works best in English, but is also able to function in some other languages, to varying degrees of success.[14] Unlike some other recent high-profile advances in AI, as of December 2022, there is no sign of an official peer-reviewed technical paper about ChatGPT.[28]

According to OpenAI guest researcher Scott Aaronson, OpenAI is working on a tool to digitally watermark the output of its text generation systems, in an attempt to combat bad actors using its services for academic plagiarism or spam.[29][30] The company says that this tool, called “AI classifier for indicating AI-written text”,[31] will “likely yield a lot of false positives and negatives, sometimes with great confidence.” An example cited in The Atlantic magazine showed that “when given the first lines of the Book of Genesis, the software concluded that it was likely to be AI-generated.”[32]

The New York Times relayed in December 2022 that the next version of GPT, GPT-4, has been “rumored” to be launched sometime in 2023.[1] In February 2023, OpenAI began accepting registrations from United States customers for a premium service, ChatGPT Plus, to cost $20 a month.[33] OpenAI is planning to release a ChatGPT Professional Plan that costs $42 per month, and the free plan is available when demand is low.

Reception

Positive


ChatGPT was met in December 2022 with some positive reviews; Kevin Roose of The New York Times labeled it “the best artificial intelligence chatbot ever released to the general public”.[1] Samantha Lock of The Guardian newspaper noted that it was able to generate “impressively detailed” and “human-like” text.[34] Technology writer Dan Gillmor used ChatGPT on a student assignment, and found its generated text was on par with what a good student would deliver and opined that “academia has some very serious issues to confront”.[35] Alex Kantrowitz of Slate magazine lauded ChatGPT’s pushback to questions related to Nazi Germany, including the statement that Adolf Hitler built highways in Germany, which was met with information regarding Nazi Germany’s use of forced labor.[36]

In The Atlantic magazine’s “Breakthroughs of the Year” for 2022, Derek Thompson included ChatGPT as part of “the generative-AI eruption” that “may change our mind about how we work, how we think, and what human creativity really is”.[37]

Kelsey Piper of the Vox website wrote that “ChatGPT is the general public’s first hands-on introduction to how powerful modern AI has gotten, and as a result, many of us are [stunned]” and that ChatGPT is “smart enough to be useful despite its flaws”.[38] Paul Graham of Y Combinator tweeted that “The striking thing about the reaction to ChatGPT is not just the number of people who are blown away by it, but who they are. These are not people who get excited by every shiny new thing. Clearly, something big is happening.”[39] Elon Musk wrote that “ChatGPT is scary good. We are not far from dangerously strong AI”.[38] Musk paused OpenAI’s access to a Twitter database pending a better understanding of OpenAI’s plans, stating that “OpenAI was started as open-source and non-profit. Neither is still true.”[40][41] Musk had co-founded OpenAI in 2015, in part to address existential risk from artificial intelligence, but had resigned in 2018.[41]


In December 2022, Google internally expressed alarm at the unexpected strength of ChatGPT and the newly discovered potential of large language models to disrupt the search engine business, and CEO Sundar Pichai “upended” and reassigned teams within multiple departments to aid in its artificial intelligence products, according to a report in The New York Times.[42] The Information website reported on January 3, 2023, that Microsoft Bing was planning to add optional ChatGPT functionality into its public search engine, possibly around March 2023.[43][44] According to CNBC reports, Google employees are intensively testing a chatbot called “Apprentice Bard”, and Google is preparing to use this “apprentice” to compete with ChatGPT.[45]

Stuart Cobbe, a chartered accountant in England and Wales, decided to test the ChatGPT chatbot by entering questions from a sample exam paper on the ICAEW website and then entering its answers back into the online test. ChatGPT scored 42 percent which, while below the 55 percent pass mark, was considered a reasonable attempt.[46]

Writing in Inside Higher Ed, professor Steven Mintz states that he “consider[s] ChatGPT … an ally, not an adversary.” He went on to say that he felt the AI could assist educational goals by doing such things as making reference lists, generating “first drafts”, solving equations, debugging, and tutoring. In the same piece, he also writes:[47]

I’m well aware of ChatGPT’s limitations. That it’s unhelpful on topics with fewer than 10,000 citations. That factual references are sometimes false. That its ability to cite sources accurately is very limited. That the strength of its responses diminishes rapidly after only a couple of paragraphs. That ChatGPT lacks ethics and can’t currently rank sites for reliability, quality, or trustworthiness.

OpenAI CEO Sam Altman was quoted in The New York Times as saying that AI’s “benefits for humankind could be ‘so unbelievably good that it’s hard for me to even imagine.’ (He has also said that in a worst-case scenario, A.I. could kill us all.)”[48]

Negative

In the months since its release, ChatGPT has been met with widespread and severe criticism from educators, journalists, artists, academics, and public advocates. James Vincent of The Verge website saw the viral success of ChatGPT as evidence that artificial intelligence had gone mainstream.[7] Journalists have commented on ChatGPT’s tendency to “hallucinate.”[49] Mike Pearl of the online technology blog Mashable tested ChatGPT with multiple questions. In one example, he asked ChatGPT for “the largest country in Central America that isn’t Mexico.” ChatGPT responded with Guatemala, when the answer is instead Nicaragua.[50] When CNBC asked ChatGPT for the lyrics to “The Ballad of Dwight Fry,” ChatGPT supplied invented lyrics rather than the actual lyrics.[27] Researchers cited by The Verge compared ChatGPT to a “stochastic parrot”,[51] as did Professor Anton Van Den Hengel of the Australian Institute for Machine Learning.[52]

In December 2022, the question and answer website Stack Overflow banned the use of ChatGPT for generating answers to questions, citing the factually ambiguous nature of ChatGPT’s responses.[3] In January 2023, the International Conference on Machine Learning banned any undocumented use of ChatGPT or other large language models to generate any text in submitted papers.[53]

Economist Tyler Cowen expressed concerns regarding its effects on democracy, citing its ability to produce automated comments, which could affect the decision process for new regulations.[54] An editor at The Guardian, a British newspaper, questioned whether any content found on the Internet after ChatGPT’s release “can be truly trusted” and called for government regulation.[55]

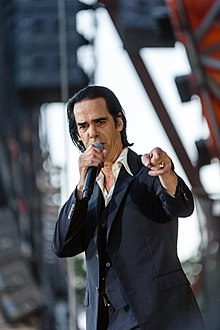

Nick Cave mocked a song written by ChatGPT in his style

In January 2023, after being sent a song written by ChatGPT in the style of Nick Cave,[56] the songwriter himself responded on The Red Hand Files[57] (and was later quoted in The Guardian) saying the act of writing a song is “a blood and guts business … that requires something of me to initiate the new and fresh idea. It requires my humanness.” He went on to say “With all the love and respect in the world, this song is bullshit, a grotesque mockery of what it is to be human, and, well, I don’t much like it.”[56][58]

Implications

In cybersecurity

Check Point Research and others noted that ChatGPT was capable of writing phishing emails and malware, especially when combined with OpenAI Codex.[59] OpenAI CEO Sam Altman wrote that advancing software could pose “(for example) a huge cybersecurity risk” and went on to predict “we could get to real AGI (artificial general intelligence) in the next decade, so we have to take the risk of that extremely seriously”. Altman argued that, while ChatGPT is “obviously not close to AGI”, one should “trust the exponential. Flat looking backwards, vertical looking forwards.”[10]

In academia

ChatGPT can write introduction and abstract sections of scientific articles, which raises ethical questions.[60] Several papers have already listed ChatGPT as co-author.[61]

In The Atlantic magazine, Stephen Marche noted that its effect on academia and especially application essays is yet to be understood.[62] California high school teacher and author Daniel Herman wrote that ChatGPT would usher in “the end of high school English”.[63] In the journal Nature, Chris Stokel-Walker pointed out that teachers should be concerned about students using ChatGPT to outsource their writing, but that education providers will adapt to enhance critical thinking or reasoning.[64] Emma Bowman with NPR wrote of the danger of students plagiarizing through an AI tool that may output biased or nonsensical text with an authoritative tone: “There are still many cases where you ask it a question and it’ll give you a very impressive-sounding answer that’s just dead wrong.”[65]

Joanna Stern with The Wall Street Journal described cheating in American high school English with the tool by submitting a generated essay.[66] Professor Darren Hick of Furman University described noticing ChatGPT’s “style” in a paper submitted by a student. An online GPT detector claimed the paper was 99.9 percent likely to be computer-generated, but Hick had no hard proof. However, the student in question confessed to using GPT when confronted, and as a consequence failed the course.[67] Hick suggested a policy of giving an ad-hoc individual oral exam on the paper topic if a student is strongly suspected of submitting an AI-generated paper.[68] Edward Tian, a senior undergraduate student at Princeton University, created a program, named “GPTZero,” that determines how much of a text is AI-generated[69] and so can be used to check whether an essay is human-written, as a way to combat academic plagiarism.[70][71]

As of January 4, 2023, the New York City Department of Education has restricted access to ChatGPT from its public school internet and devices.[72][73]

In a blinded test, ChatGPT was judged to have passed graduate-level exams at the University of Minnesota at the level of a C+ student and at Wharton School of the University of Pennsylvania with a B to B- grade.[74]

Ethical concerns

Labeling data

A TIME magazine investigation revealed that, to build a safety system against toxic content (e.g. sexual abuse, violence, racism, sexism), OpenAI used outsourced Kenyan workers earning less than $2 per hour to label such content. These labels were used to train a model to detect such content in the future. The outsourced laborers were exposed to such toxic and dangerous content that they described the experience as “torture”.[75] OpenAI’s outsourcing partner was Sama, a training-data company based in San Francisco, California.

Jailbreaking

ChatGPT attempts to reject prompts that may violate its content policy. However, in early December 2022 some users managed to jailbreak ChatGPT, using various prompt engineering techniques to bypass these restrictions, and tricked it into giving instructions for how to create a Molotov cocktail or a nuclear bomb, or into generating arguments in the style of a Neo-Nazi.[76] A Toronto Star reporter had uneven personal success in getting ChatGPT to make inflammatory statements shortly after launch: ChatGPT was tricked into endorsing the 2022 Russian invasion of Ukraine, but even when asked to play along with a fictional scenario, ChatGPT balked at generating arguments for why Canadian Prime Minister Justin Trudeau was guilty of treason.[77][78]